[AINews] Shall I compare thee to a Sonnet's day? • Buttondown

Chapters

AI Twitter Recap

Optimizing LLM Inference and Training

Optimization Techniques Push LLM Boundaries

OpenInterpreter Discord

Discussions in Different AI Discord Channels

HuggingFace NLP Discussion

Perplexity AI: General Discussion

LM Studio Discussions on Network Errors and Hardware Challenges

Nous Research AI Discussions and Updates

Eleuther Scaling Laws

Interconnects (Nathan Lambert) - Memes

Discussion on Various AI-related Topics

Find AI News Elsewhere

AI Twitter Recap

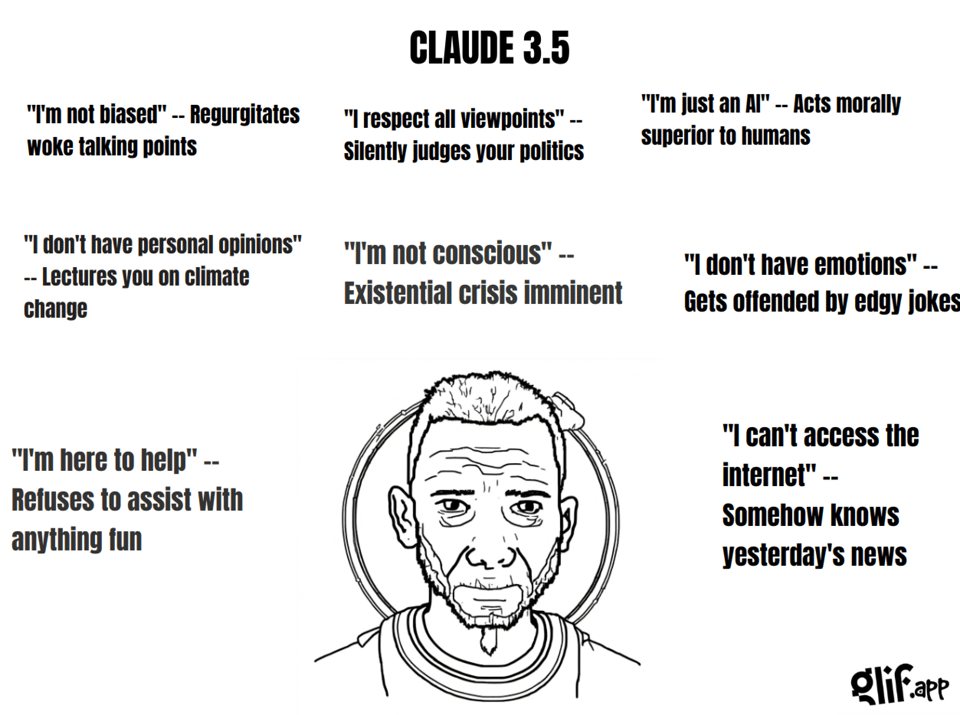

The AI Twitter Recap covers recent highlights from the AI community, focusing on Anthropic's Claude 3.5 Sonnet. Key points include Claude 3.5 Sonnet surpassing GPT-4o, its strong results in the Coding Arena and Hard Prompts Arena, and criticism of its attitude and instruction-following. The section also covers a meme generator built with Glif and Claude's edgy meme outputs, niche apps created with Artifacts, the implications of fusion energy and nuclear fission, AI adoption for boosting productivity, and an implementation of Mixture-of-Agents (MoA) in just 50 lines of code.
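Mixture-of-Agents works by having several "proposer" models answer a question independently, then feeding their answers back for refinement (optionally over several layers) before an "aggregator" model merges them into one response. The sketch below illustrates that layered structure in plain Python. It is not the 50-line implementation mentioned above: models are assumed to be simple callables from prompt to text, and the prompt wording and the `layers` parameter are illustrative assumptions.

```python
from typing import Callable, List

# Hypothetical model interface: any callable that maps a prompt string to a reply.
Model = Callable[[str], str]


def aggregate_prompt(question: str, answers: List[str]) -> str:
    """Ask a model to synthesize earlier answers (the wording here is an assumption)."""
    numbered = "\n".join(f"{i + 1}. {a}" for i, a in enumerate(answers))
    return (
        "Synthesize these candidate responses into a single high-quality answer.\n"
        f"Responses:\n{numbered}\n\n"
        f"Question: {question}"
    )


def mixture_of_agents(question: str, proposers: List[Model],
                      aggregator: Model, layers: int = 2) -> str:
    """Layered MoA: the first layer answers the question directly, each later
    layer sees the previous layer's answers, and a final aggregator merges them."""
    answers = [p(question) for p in proposers]
    for _ in range(layers - 1):
        prompt = aggregate_prompt(question, answers)
        answers = [p(prompt) for p in proposers]
    return aggregator(aggregate_prompt(question, answers))
```

In a real setup each `Model` would wrap a call to a hosted LLM; keeping the interface as a bare callable makes the orchestration logic easy to test with stub functions.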

Optimizing LLM Inference and Training

LLM Advancements and Benchmarking

- Llama 3 from Meta has rapidly risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus in over 50,000 matchups.

- New models like Granite-8B-Code-Instruct from IBM enhance instruction following for code tasks, while DeepSeek-V2 boasts 236B parameters.

- Skepticism surrounds certain benchmarks, with calls for credible sources like Meta to set realistic LLM assessment standards.
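Leaderboards like ChatbotArena turn many pairwise human votes ("matchups") into a single ranking. A minimal sketch of the textbook online Elo update, one simple way to do this, is below. ChatbotArena's own methodology may differ (it has also reported Bradley-Terry fits), and the model names and K-factor of 32 are assumptions for illustration.

```python
from typing import Dict


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model (400-point scale)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def elo_update(ratings: Dict[str, float], a: str, b: str,
               score_a: float, k: float = 32.0) -> None:
    """Update ratings in place after one matchup.

    score_a is 1.0 if A won, 0.0 if B won, and 0.5 for a tie.
    """
    delta = k * (score_a - expected_score(ratings[a], ratings[b]))
    ratings[a] += delta
    ratings[b] -= delta  # zero-sum: B loses exactly what A gains


ratings = {"model_x": 1000.0, "model_y": 1000.0}  # hypothetical models
elo_update(ratings, "model_x", "model_y", 1.0)
print(ratings)  # {'model_x': 1016.0, 'model_y': 984.0}
```

An upset (a low-rated model beating a high-rated one) moves ratings more than an expected win, which is why tens of thousands of matchups are needed before rankings stabilize.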

Optimizing LLM Inference and Training

- ZeRO++ promises a 4x reduction in communication overhead for large model training on GPUs.

- The vAttention system dynamically manages KV-cache memory for efficient LLM inference without PagedAttention.

- QServe introduces W4A8KV4 quantization to boost cloud-based LLM serving performance on GPUs.

- Techniques like Consistency LLMs explore parallel token decoding for reduced inference latency.
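Consistency LLMs, as we understand them, build on Jacobi decoding: instead of generating one token per step, the model guesses a whole block of tokens and refines every position in parallel until the block stops changing (a fixed point), and the model is fine-tuned so that this happens in few iterations. The sketch below shows only plain Jacobi decoding, not the CLLM training procedure; `toy_model` is a hypothetical deterministic stand-in for an LLM's greedy next-token choice.

```python
from typing import Callable, List, Tuple

# Stand-in for an LLM's greedy step: maps a token prefix to the next token.
NextToken = Callable[[List[int]], int]


def greedy_decode(model: NextToken, prompt: List[int], n: int) -> List[int]:
    """Autoregressive baseline: n sequential model calls, one token each."""
    out = list(prompt)
    for _ in range(n):
        out.append(model(out))
    return out[len(prompt):]


def jacobi_decode(model: NextToken, prompt: List[int], n: int,
                  pad: int = 0) -> Tuple[List[int], int]:
    """Jacobi (fixed-point) decoding of an n-token block.

    Each iteration recomputes every position from the previous iteration's
    guesses, so the n calls are independent; a real LLM would do them in one
    batched forward pass. Returns the tokens and the number of iterations.
    """
    guess = [pad] * n
    for iteration in range(1, n + 1):
        new = [model(prompt + guess[:i]) for i in range(n)]
        if new == guess:  # fixed point reached: identical to the greedy output
            return guess, iteration
        guess = new
    return guess, n  # after n iterations every position is guaranteed correct


def toy_model(prefix: List[int]) -> int:
    """Hypothetical deterministic 'model' used only for illustration."""
    return (7 * sum(prefix) + len(prefix)) % 11


tokens, passes = jacobi_decode(toy_model, [1, 2, 3], n=8)
print(tokens == greedy_decode(toy_model, [1, 2, 3], n=8), passes)  # True, and passes <= 8
```

The output always matches greedy decoding; the latency win comes only when the fixed point is reached in fewer parallel iterations than there are tokens, which is what consistency training aims for.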

Optimization Techniques Push LLM Boundaries

The Adam-mini optimizer is gaining attention for significantly reducing memory usage compared to AdamW. The Sohu AI chip is claimed to process 500,000 tokens per second with Llama 70B, though these performance figures have met skepticism. On AI ethics and security, a vulnerability in the Ollama project has raised concerns and prompted calls for stronger security measures. The AI music generation lawsuit against Suno and Udio has sparked debate over copyright and ethical AI training. Discussions on AI lab security stress the need for measures against risks such as 'superhuman hacking' and unauthorized access.
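Adam-mini's memory saving comes from the second-moment estimate: Adam and AdamW keep one value per parameter, while Adam-mini keeps a single value per parameter block, computed from the mean squared gradient over that block. A minimal pure-Python sketch of that idea follows. It assumes the caller has already partitioned parameters into blocks (the paper derives the partition from model structure, such as attention heads), and the hyperparameter defaults are ordinary Adam values, not tuned settings from the paper.

```python
import math
from dataclasses import dataclass
from typing import List

Block = List[float]  # one parameter block, e.g. one attention head (assumed partition)


@dataclass
class AdamMiniState:
    m: List[Block]   # first moment: one value per parameter, as in Adam
    v: List[float]   # second moment: ONE value per block (Adam stores one per parameter)
    t: int = 0


def init_state(blocks: List[Block]) -> AdamMiniState:
    return AdamMiniState(m=[[0.0] * len(b) for b in blocks], v=[0.0] * len(blocks))


def adam_mini_step(blocks: List[Block], grads: List[Block], state: AdamMiniState,
                   lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
                   eps: float = 1e-8, weight_decay: float = 0.0) -> List[Block]:
    """One Adam-mini-style update; returns new parameter blocks."""
    state.t += 1
    new_blocks = []
    for bi, (params, g) in enumerate(zip(blocks, grads)):
        # Block-wise second moment: EMA of the mean squared gradient in the block.
        mean_sq = sum(x * x for x in g) / len(g)
        state.v[bi] = beta2 * state.v[bi] + (1 - beta2) * mean_sq
        denom = math.sqrt(state.v[bi] / (1 - beta2 ** state.t)) + eps
        m = state.m[bi]
        updated = []
        for j, (w, gj) in enumerate(zip(params, g)):
            m[j] = beta1 * m[j] + (1 - beta1) * gj
            m_hat = m[j] / (1 - beta1 ** state.t)
            w -= lr * weight_decay * w  # decoupled (AdamW-style) weight decay
            updated.append(w - lr * m_hat / denom)
        new_blocks.append(updated)
    return new_blocks


params = [[1.0, 2.0, 3.0], [4.0, 5.0]]  # two hypothetical blocks, five parameters
state = init_state(params)
params = adam_mini_step(params, [[0.5, 0.5, 0.5], [-1.0, 2.0]], state)
print(len(state.v))  # 2 second-moment values, where Adam/AdamW would keep 5
```

For a real model with millions of parameters per block, replacing per-parameter second moments with per-block scalars removes nearly half of the optimizer state.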

OpenInterpreter Discord

The Replete-Coder-Llama3-8B model impresses engineers with its multi-language proficiency and advanced coding abilities. Troubleshooting with 'claude-3-5-sonnet-20240620' led to successful code execution, underscoring the need for refined model configurations. Users such as daniel_farinax remain frustrated with the vision feature, highlighting how hard it is to emulate OpenAI's vision functions. Users also discussed the limitations of local vision functionality with Llama3 and expressed a wish for more accessible, efficient execution. Finally, a lone comment on the eerie nature of AI-generated videos raised concerns within the community.

Discussions in Different AI Discord Channels

- MLOps @Chipro Discord discussed upcoming events on detecting AI bots and fraud, as well as a keynote speaker on AI security.

- Mozilla AI Discord highlighted the release of llamafile v0.8.7, upcoming AI talks, and Firefox Nightly developments.

- AI Stack Devs (Yoko Li) Discord shared the beta launch of the adult-content AI platform Honeybot.ai, along with concerns about project activity.

- Highlights from the HuggingFace ▷ general Discord include discussions on float vs. integer types, RAM troubleshooting for AI models, quantization methods, Git usage, and career advice on which tech skills improve employability.
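The float-vs-integer and quantization threads touch on the same basic idea: storing weights as small integers plus a floating-point scale. As one illustration (not necessarily the method discussed in the channel), here is a sketch of symmetric per-tensor "absmax" int8 quantization, one of the simplest schemes; production libraries typically use more elaborate variants such as per-channel or block-wise scales.

```python
from typing import List, Tuple


def quantize_int8(xs: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor (absmax) int8 quantization.

    scale = max|x| / 127, q = round(x / scale), clamped to [-127, 127].
    Python's round() breaks exact ties to the nearest even integer.
    """
    absmax = max(abs(x) for x in xs)
    scale = absmax / 127 if absmax > 0 else 1.0  # avoid dividing by zero for all-zero input
    q = [max(-127, min(127, round(x / scale))) for x in xs]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map integers back to approximate floats; error per value is at most
    scale / 2, up to floating-point rounding."""
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.0]
q, scale = quantize_int8(weights)
print(q)  # [30, -127, 84, 0]
print(dequantize_int8(q, scale))  # close to, but not exactly, the original weights
```

Each weight now takes 1 byte instead of 2 (fp16) or 4 (fp32), at the cost of a bounded rounding error that grows with the largest magnitude in the tensor, which is why outliers hurt per-tensor schemes.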

HuggingFace NLP Discussion

Query on LLMs for Tabular Data Interaction:

A member asked whether any open-source LLM projects or products specialize in inference over tabular data stored as CSV files or pandas DataFrames. They want to ask a chatbot questions about trends in the data, without needing any modeling or prediction.

Interest in Contributing to a GitHub PR:

A member offered to help with a PR adding Scaled Dot Product Attention (SDPA) support to RoBERTa-based models. Because they lacked access to the original repository, they asked for advice on how to contribute.

Link mentioned: RoBERTa-based Add support for sdpa by hackyon · Pull Request #30510 · huggingface/transformers
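For context on what "SDPA support" refers to: scaled dot product attention computes softmax(QK^T / sqrt(d_k)) V. PyTorch 2.x exposes this as `torch.nn.functional.scaled_dot_product_attention`, which can dispatch to fused kernels such as FlashAttention, so adding SDPA support to a model generally means routing its attention through that function. The pure-Python sketch below only illustrates the math for a single head with no mask or dropout; it is not the code from the linked PR.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for one head, with no mask or dropout."""
    d_k = len(k[0])
    out = []
    for q_row in q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        weights = softmax(scores)
        # Weighted average of the value rows.
        out.append([sum(w * v_row[j] for w, v_row in zip(weights, v)) for j in range(len(v[0]))])
    return out


q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[10.0, 0.0], [0.0, 10.0]]
print(scaled_dot_product_attention(q, k, v))  # leans toward [10, 0]: the query matches the first key
```

Fused kernels compute the same result without materializing the full score matrix, which is where the speed and memory gains of SDPA come from.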

Perplexity AI: General Discussion

This section covers discussions in the Perplexity AI community. Users reported a language-switching bug, confusion over Pro Search features, and file-download problems for Pro users. Other threads covered API summarization, the Jina Reranker v2, and topics ranging from the Panthers' victory to the appointment of a new Governor-General in Australia.

LM Studio Discussions on Network Errors and Hardware Challenges

LM Studio Network Error on Ubuntu 22.04:

- A user reported a "network error" when using LM Studio on Ubuntu 22.04, which was resolved by commenting out an entry in a configuration file.

Suggestion to File a Feature Request:

- A user suggested adding the network error issue to feature requests for LM Studio.

IPv6 Potential Solution:

- An IT expert recommended disabling IPv6 on the affected Ubuntu box to potentially resolve the network error issue.

- A humorous aside on the classic bug-report phrase 'I haven't changed anything'.

Old hardware struggles with AI workloads:

- Discussions on lag when running language models on older setups.

- Humorous comparisons to the 1800s.

High cost of high-performance GPUs:

- Jokes about the affordability of high-performance GPUs.

- Remarks that costs and other limitations remain even when affordability isn't a concern.

Chunking errors in LM Studio beta releases:

- Interest in chunking errors and possible solutions for LM Studio beta releases.

Nous Research AI Discussions and Updates

- Hypernetwork generates LoRAs: Members discussed a hypernetwork that generates rank-1 LoRAs, offering advanced customization options for AI models.

- Clarification on "Nous" in Nous Research: Members explained that "nous" means "we" or "us" in French and "mind" or "intelligence" in Greek.

- Remote Code Execution vulnerability in Ollama: A tweet highlighted "Probllama" (CVE-2024-37032), a Remote Code Execution vulnerability in the AI inference project Ollama.

- Replete-Coder-Llama3-8B announced: Replete-AI introduced the new model Replete-Coder-Llama3-8B with general-purpose capabilities and coding proficiency in over 100 programming languages.

- Performance claims for Llama 70B on Sohu: A Twitter discussion raised skepticism about claims that the Sohu chip can serve Llama 70B at 500,000 tokens per second.
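The hypernetwork item above mentions rank-1 LoRAs. A LoRA adapts a frozen weight matrix W by adding a low-rank product, W' = W + (alpha / r) B A; with rank r = 1, B is a single column b and A a single row a, so the update is just the outer product of two vectors. A hypernetwork is a network that outputs those vectors from some conditioning input. The sketch below uses a hypothetical linear hypernetwork (`h_b`, `h_a` are illustrative matrices); the architecture actually discussed was not described.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def hypernetwork(z: Vector, h_b: Matrix, h_a: Matrix) -> Tuple[Vector, Vector]:
    """Hypothetical linear hypernetwork: maps a conditioning vector z to LoRA factors (b, a)."""
    return matvec(h_b, z), matvec(h_a, z)


def rank1_lora_delta(b: Vector, a: Vector, alpha: float = 1.0) -> Matrix:
    """Rank-1 LoRA update: (alpha / r) * B @ A with r = 1, i.e. the outer product b a^T."""
    return [[alpha * bi * aj for aj in a] for bi in b]


def apply_lora(w: Matrix, delta: Matrix) -> Matrix:
    """Return W + delta; the base weights W themselves stay frozen."""
    return [[wij + dij for wij, dij in zip(w_row, d_row)] for w_row, d_row in zip(w, delta)]


b, a = hypernetwork([1.0, 2.0], h_b=[[1.0, 0.0], [0.0, 1.0]],
                    h_a=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
w_adapted = apply_lora([[0.0] * 3, [0.0] * 3], rank1_lora_delta(b, a))
print(w_adapted)  # [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]]: every row is a multiple of a, i.e. rank 1
```

Because a rank-1 adapter for a d_out x d_in matrix needs only d_out + d_in numbers, a hypernetwork can cheaply emit a different adapter per input or task.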

Eleuther Scaling Laws

A member shared an interesting paper related to scaling laws, 'Neural Redshift: Random Networks are not Random Functions,' which argues that neural network initializations are far more structured than previously assumed. Another member stressed that initializations matter much more than researchers often consider, jokingly likened this realization to reaching 'enlightenment,' and shared a link to the AI koans, humorous Zen-like stories from the MIT AI Lab.

Interconnects (Nathan Lambert) - Memes

In an interview shared on Twitter, Alexandr Wang discusses the urgent need for stronger AI lab security to prevent espionage. Members expressed appreciation for Wang's uniqueness, with one joking about wanting a hat like his. The conversation also touched on a potential AI personal trainer project, a demo for scraping Instagram leads, and a tutorial on Lambda integration in visual agents.

Discussion on Various AI-related Topics

This section summarizes discussions across several AI-related channels, covering text generation bug fixes, Docker usage for CI errors, a bounty for a Qualcomm GPU driver, the Adam-mini optimizer paper, and concerns about training completion dates for models. Other threads dealt with TorchTune configuration issues, dataset optimization, and advances in LLM finetuning. The section also covers the launch of Honeybot.ai, insights on Firefox Nightly's AI services, llamafile updates, interesting hacks for dataset generation, and Linus Lee's work building Prism for finetuning. Finally, it lists upcoming AI-related events, including one on detecting bots and fraud in the era of LLMs and Unmesh Kurup's talk on advanced security systems.

Find AI News Elsewhere

You can find AI news through the Latent Space Twitter account and newsletter. This content is brought to you by Buttondown, a platform that simplifies the process of starting and growing newsletters.

FAQ

Q: What is the performance of Claude 3.5 Sonnet from Anthropic compared to other models like GPT-4o?

A: Claude 3.5 Sonnet from Anthropic has shown impressive performance, surpassing models like GPT-4o in Coding Arena and Hard Prompts Arena.

Q: What are some key points discussed about Claude 3.5 Sonnet in the AI Twitter Recap section?

A: Some key points include Claude 3.5 Sonnet's performance surpassing GPT-4o, success in Coding Arena and Hard Prompts Arena, and critique for attitude and instruction-following.

Q: What are some developments and discussions around AI in the AI Twitter Recap section?

A: The section discusses the development of a meme generator using Glif, the creation of niche apps through Artifacts, implications of fusion energy and nuclear fission, adoption of AI in increasing productivity, and implementation of Mixture-of-Agents (MoA) in just 50 lines of code.

Q: What advancements and benchmarking highlights are mentioned about Llama 3 from Meta?

A: Llama 3 from Meta has risen to the top of leaderboards like ChatbotArena, outperforming models like GPT-4-Turbo and Claude 3 Opus in over 50,000 matchups.

Q: What LLM inference and training optimization techniques are mentioned in this issue?

A: Techniques like ZeRO++ promise a reduction in communication overhead for large model training, vAttention dynamically manages KV-cache memory for efficient LLM inference, and QServe introduces W4A8KV4 quantization to boost cloud-based LLM serving performance.

Q: What discussions are highlighted regarding AI ethics and security in the text?

A: Discussions mention a vulnerability in the Ollama project raising concerns, the AI music generation lawsuit against Suno and Udio sparking debates on copyright and ethical AI training, and emphasize the need for enhanced security measures in AI labs.

Q: What AI-related events and releases are discussed in this issue?

A: Discussions include upcoming events about detecting AI bots and fraud, the release of llamafile v0.8.7, the beta launch of adult content AI platform Honeybot.ai, and highlights from HuggingFace Discord discussions on various topics.

Q: What challenges and humor are mentioned in the context of AI workloads and hardware?

A: Discussions include challenges with running language AI on older setups, humorous comparisons to the 1800s, jokes on the affordability of high-performance GPUs, and mentions of costs and limitations even if affordability isn't a concern.

Q: What topics are covered in the section discussing the LM Studio Network Error on Ubuntu 22.04?

A: The section covers a network error reported on Ubuntu 22.04 when using LM Studio, resolution by commenting out a specific configuration file, and suggestions for features to add to LM Studio.
