[AINews] Mistral Large disappoints • ButtondownTwitterTwitter

Chapters

Detailed Model Discussion

CUDA Mode Discord Summary

Consumer GPU and Technical Remedy Discussions

Mistral Models

Mistral Chat Discussions

LM Studio Discussions

Usage and Comparisons of Perplexity AI Models

HuggingFace Cool Finds

HuggingFace NLP

HuggingFace Diffusion Discussions

LlamaIndex General Chat

Latent Space Announcements

OpenAccess AI Collective (axolotl) Discussion Highlights

CUDA MODE ▷ #ring-attention

LangChain AI - Share Your Work

Fine-Tuning and Models Enhancements

External Links and Brought By Button

Detailed Model Discussion

The section discusses the launch of Mistral-Large, comparing it to GPT4 and the community's reception. Mistral-Small's performance compared to Mixtral 8x7B, technical hurdles like deployment and fine-tuning, commercial impacts, and user-driven design ideas. LM Studio's troubleshooting and multilingual support are also highlighted.

CUDA Mode Discord Summary

CUDA Under Fire

Computing legend Jim Keller criticized NVIDIA's CUDA architecture for lacking elegance and being cobbled together. The introduction of ZLUDA enables CUDA code to run on AMD and Intel GPUs, challenging NVIDIA's dominance (GitHub link).

Gearing Up with GPUs

Debates surfaced regarding GPU choices for AI with the 4060 ti being the cheapest 16GB variant and discussions revolving around the suitability of different GPUs for AI applications.

Consumer GPU and Technical Remedy Discussions

Discussions in this section centered around the consumer GPU market, particularly highlighting the <strong>3090</strong> GPU with 24GB VRAM as a strong option for Large Language Model (LLM) tasks. Topics also included strategies for buying second-hand GPUs and technical solutions for issues that may arise. Key discussions included Clarity on quantized computation methods, interest in Triton for Jax support, advancements in ring attention with Flash Attention Finessed, efficient LLM serving methods from CMU, learning efficiency through an MIT course, and CUDA-MODE lectures. Job prospects for CUDA and C++ experts were also mentioned.

Mistral Models

- GPU Essentials for Server Builds: Discussions on the necessity of GPUs for running language models efficiently, with recommendations for server builds.

- The Cost of Scaling Up: Considerations on the hardware requirements and costs associated with scaling up language models, including specialized hardware for larger models.

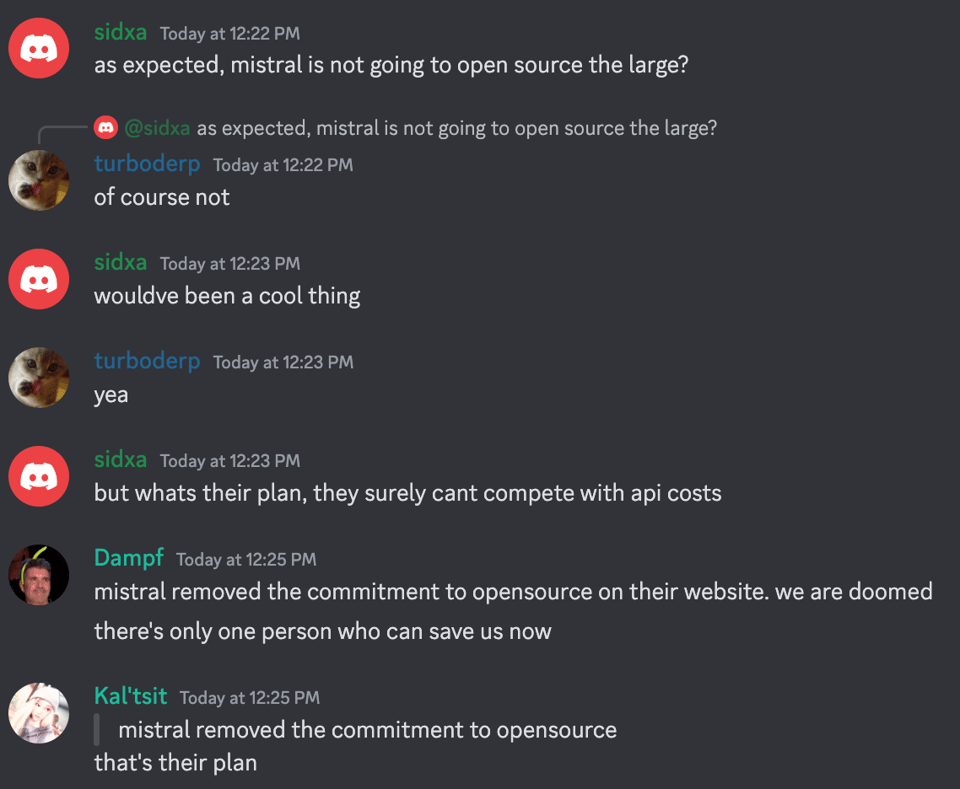

- Questions About Mistral's Direction: Community concerns and speculations on Mistral's shift towards closed-weight models and the potential for open weight models in the future.

- Benchmarks of Mistral Models: Testing and results comparison of Mistral's models, positioning Mistral Large in comparison to other models like GPT-4.

- Hopes for Open Access to New Models: Community sentiment expressing hopes for future open-access models while discussing skepticism tied to involvement with large tech firms like Microsoft.

Mistral Chat Discussions

The Mistral Chat channel on Discord covers various discussions related to Mistral's capabilities and functionalities. Users inquire about topics such as dynamic temperature sampling, local machine setup, GPU compatibility, fine-tuning data quantities, model quantization impact, Mistral's large model announcements, and more. The channel also addresses issues like freezing errors, model output coherence, serverless hosting options, and concerns about data normalization and precision levels. Overall, the Mistral Chat provides a platform for users to share experiences, ask questions, and explore the potential of Mistral in different applications.

LM Studio Discussions

LM Studio ▷ #🤖-models-discussion-chat (98 messages🔥🔥):

- Hyperparameter Evaluation Dilemma: @0xtotem inquired about evaluating RAG hyperparameters on own dataset or a similar one, citing it remains unresolved as a personal choice.

- Dolphin Model Dilemma: @yahir9023 shared struggles creating a Dolphin model prompt template in LM Studio due to Discord limitations.

- Model Memory Challenge: @mistershark_ discussed difficulty in keeping multiple large language models in VRAM, mentioning the need for significant hardware and sharing the GitHub link to ooba.

- Translation Model Inquiry: @goldensun3ds questioned best Japanese to English translation model, suggesting a potential Mixtral model and highlighting the need for powerful hardware setups.

- Mixed-Expert Models: @freethepublicdebt inquired about future models with different expert precisions, promoting generalization and GPU efficiency, receiving no response regarding their existence.

LM Studio ▷ #🧠-feedback (8 messages🔥):

- Fire Emoji for Fresh Update: @macfly praised the latest LM Studio update for its look and feel.

- Acknowledging Needed Fix: @yagilb assured an issue will be fixed and apologized for any inconvenience.

- High Praise for LM: @iandol praised LM's excellent GUI and user-friendly local server setup.

- Download Dilemma in China: @iandol reported difficulties downloading models in China due to proxy support limitations.

- Seeking Dolphin 2.7 Download Support: @mcg9523 faced challenges downloading Dolphin 2.7 in LM Studio, receiving advice to improve visibility.

LM Studio ▷ #🎛-hardware-discussion (178 messages🔥🔥):

- Quest for CUDA Support in AMD: Users discussed AMD's potential development of CUDA support and debated GPU choices for running LLM models.

- To NVLink or Not to NVLink: Participants explored pros and cons of NVLink for multi-GPU setups, considering performance and cost factors.

- Mac vs. Custom PC for Running LLMs: Users debated between Mac Studio and custom PC setups for AI model use, considering speed, cost, and ease of use.

- Troubleshooting PC Shutdowns During LLM Use: @666siegfried666 sought help with PC shutdowns during LM Studio use.

LM Studio ▷ #🧪-beta-releases-chat (27 messages🔥):

- **Celebrating

Usage and Comparisons of Perplexity AI Models

- Users in the 'sharing' channel of Perplexity AI are actively discussing various topics, including model reviews, discussions about PerplexityAI and ElevenLabs, and analyses of AI models like Mistral.

- There is curiosity about global events like the first US moon mission, Lenovo's transparent laptop, and Starshield in Taiwan.

- Tech enthusiasts are exploring model comparisons such as iPhone models and tech strategies like eigenlayer nodes.

- Individuals are leveraging Perplexity AI for personal learning and discovery, searching topics like American athletes.

- Miscellaneous interests in the channel range from mathematical calculations to personal statements.

HuggingFace Cool Finds

Deep Unsupervised Learning Course Spring 2024

- User @omrylcn shared a link to the Berkeley CS294-158 SP24 course on Deep Unsupervised Learning, covering Deep Generative Models and Self-Supervised Learning.

The Emergence of Large Action Models

- User @fernando_cejas highlighted Large Action Models (LAMs), an advanced AI system capable of performing human-like tasks in digital environments.

Introducing Galaxy AI with Accessible Models

- User @white_d3vil introduced the Galaxy AI platform offering free API access to various AI models including GPT-4, GPT-3.5, and Gemini-Pro, available for testing in projects on the site.

Exploring VLM Resolution Challenges and Solutions

- User @osanseviero recommended blog posts from HuggingFace discussing resolution challenges in vision-language models (VLMs) and a new approach to address this issue with demo and models available on the HuggingFace hub.

Scale AI's Rise in the Data Labeling Market

- User @valeriiakuka shared an article about Scale AI becoming one of the highest-valued companies in the data labeling market, marking its 8th anniversary.

HuggingFace NLP

Fine-Tuning Follies:

- User @jimmyfromanalytics is facing issues fine-tuning Flan T5 for generating positive and negative comments on a niche topic and seeks advice. The model is outputting incoherent sentences after fine-tuning, suggesting difficulty in prompt engineering.

BERT vs. LLM for Text Classification:

- User @arkalonman asks for sources comparing fine-tuning a larger LLM like Mistral 7B or Gemma 7B with a standard BERT variant for text classification. User @lavi_39761 advises that encoder models are more suited and efficient for classification purposes.

Puzzling Finetuning Failures:

- User @frosty04212 reports an issue with fine-tuning an already fine-tuned RoBERTa model for NER, encountering 0 and NaN loss values. The issue seems resolved after reinstalling the environment.

DeciLM Training Dilemmas:

- User @kingpoki is trying to train DeciLM 7b with qlora but encounters a performance warning related to embedding dimension not set to a multiple of 8. Users discuss possible reasons for the warning.

Whisper Project Queries:

- User @psilovechai is looking for a local project with an interface like Gradio to train and process transcribing audio files using Whisper. They receive suggestions for GitHub repositories that could offer a solution.

HuggingFace Diffusion Discussions

- @mfd000m is seeking advice on using the model akiyamasho/AnimeBackgroundGAN.

- @tmo97 mentions LM Studio triggering a query from @mfd000m.

- @alielfilali01 inquires about cross-language model finetuning.

- @khandelwaal.ankit faces difficulties fine-tuning the Qwen/Qwen1.5-0.5B model.

- @chad_in_the_house shares Japanese Stable Diffusion model card.

LlamaIndex General Chat

The section discusses various topics related to AI and machine learning, including misidentifying protagonists in literature, advancements in multimodal learning, tools for financial analysis, context management in large window LLMs, open-source text generation applications, and debunking claims about AI capabilities. The conversations touch on practical applications, challenges, and developments in the field.

Latent Space Announcements

Latent Space Announcements

In the Latent Space channel, there were various discussions taking place related to AI, models, and tools. Some highlighted topics included:

- T5 Paper Discussion Imminent: A session led by @bryanblackbee to discuss the T5 paper.

- AI in Action Event Kickoff: An event featuring @yikesawjeez and local models.

- Model Fine-Tuning with LoRAs: Discussions about deploying and stacking LoRAs for generative models.

- Community Milestone Celebration: Sharing a birthday message and celebration within the community.

Links mentioned included Discord for communication and engagement, Notion for note-taking, and tools like SDXL Lightning and ComfyUI for AI tasks.

OpenAccess AI Collective (axolotl) Discussion Highlights

OpenAccess AI Collective (axolotl) Discussion Highlights

- GPU-Powered Mystery: Discussion on issues faced with high loss and extended training times using Nvidia RTX 3090 graphics cards.

- Gotta Troubleshoot 'Em All: Members troubleshooting high loss during training and checkpoint failures.

- 300 Seconds of Slowness: Assistance requested for slow model merging and GPU non-utilization issues.

- Docker Dilemma: Docker errors encountered when running Axolotl, including GPU connection issues on Ubuntu.

- Newbie's Navigator Needed: Request for an easy-to-follow tutorial for beginners using Axolotl to fine-tune models.

For more details and links, refer to the full discussion.

CUDA MODE ▷ #ring-attention

Tweaking Attention for Speed:

User @zhuzilin96 implemented a zigzag_ring_flash_attn_varlen_qkvpacked_func, showing speed improvement. @iron_bound explained Flash Attention benefits for memory efficiency and training speed. @andreaskoepf led a discussion on maximizing RingAttention benefits for larger batch sizes.

Flash Attention Finessed:

@iron_bound shared an explanation about Flash Attention from Hugging Face's documentation, detailing its benefits.

In-Depth Optimization Discussions:

@w0rlord and @andreaskoepf discussed softmax base 2 tricks and flash attention function accuracy. A notebook regarding softmax_base2_trick was shared.

LangChain AI - Share Your Work

Build Custom Chatbots with Ease:

- @deadmanabir shared a guide on crafting personalized chatbots utilizing OpenAI, Qdrant DB, and Langchain JS/TS SDK. Further details available on Twitter.

Insights on AI in the Insurance Industry:

- @solo78 expressed interest in implementing AI in the finance function within the insurance sector.

Merlinn AI Empowers Engineers:

- @david1542 introduced Merlinn, a project aiding on-call engineers in incident investigations and troubleshooting, utilizing Langchain under the hood.

Langchain on Rust:

- @edartru shared Langchain-rust, enabling Rust developers to write programs with large language models. Source code available on GitHub.

Novel Resume Optimizer Launch:

- @eyeamansh developed an open-source resume optimizer using AI, successful in securing calls from tech giants like NVidia and AMD. The tool aims to reduce cost and effort and can be found on GitHub.

Fine-Tuning and Models Enhancements

Fine-Tuning with Full Documents or Extracts?

- User @pantsforbirds is achieving great results with 1-shot data extraction using gpt-4-turbo by embedding entire documents into the prompt. They seek advice on whether to embed full example documents or just relevant sections in their finetuning dataset for a more complicated extraction/classification task.

FireFunction V1 Sparks Interest

- User @sourya4 asked for top choices for function calling with open-weights models and shared a link to @lqiao's announcement about FireFunction V1. This new tool promises GPT-4-level structured output and decision-routing at higher speeds. Also, it offers open-weights availability and commercial usability with a supportive blog post.

Structured Output for Better Development

- The announcement from @lqiao introduced JSON mode and grammar mode for all language models, ensuring structured outputs and reducing time spent on system prompts. This was detailed in a second blog post.

Hackathon for Hands-on Experience

- User @yikesawjeez mentioned current preferred tools for function calling and flagged an upcoming hackathon focused on FireFunction as a potential game-changer in determining a new favorite.

External Links and Brought By Button

This section contains two hyperlinks. The first one leads to a Twitter account for Latent Space Pod, and the second one directs to a newsletter at latent.space. Additionally, the content is brought by Buttondown, which is described as the simplest method to initialize and expand a newsletter.

FAQ

Q: What is Mistral-Large and how does it compare to GPT4?

A: Mistral-Large is discussed in comparison to GPT4 with insights on the community's reception and its launch.

Q: What are the technical hurdles faced with Mistral-Small compared to Mixtral 8x7B?

A: The section discusses Mistral-Small's performance compared to Mixtral 8x7B and the technical challenges like deployment and fine-tuning.

Q: What criticisms did Jim Keller make about NVIDIA's CUDA architecture?

A: Jim Keller criticized NVIDIA's CUDA architecture for lacking elegance and being cobbled together.

Q: What is ZLUDA and how does it challenge NVIDIA's dominance?

A: ZLUDA enables CUDA code to run on AMD and Intel GPUs, challenging NVIDIA's dominance in the market.

Q: What discussions took place regarding GPU choices for AI applications?

A: The debates focused on GPU choices for AI, including the affordability of the 4060 ti and the suitability of different GPUs for AI applications.

Q: What were the key discussions surrounding Mistral models in the community?

A: Key discussions included Mistral's direction towards closed-weight models, benchmarks of Mistral models compared to others, and hopes for open access to new models.

Q: What are some of the user-driven design ideas highlighted in LM Studio?

A: LM Studio highlighted design ideas like hyperparameter evaluation, challenges in model memory, translation model inquiries, and mixed-expert models.

Q: What were the discussions around CUDA support in AMD among users?

A: Discussions included the potential development of CUDA support in AMD GPUs and the debate on GPU choices for running large language models.

Q: What are the community's concerns about Mistral's shift towards closed-weight models?

A: Community discussions covered concerns and speculations on Mistral's direction towards closed-weight models and the potential for open weight models in the future.

Q: What are the features and benefits of Flash Attention and its implications?

A: Users discussed the implementation of Flash Attention for memory efficiency and training speed, along with maximizing its benefits for larger batch sizes.

Q: What was highlighted in the discussions regarding large action models (LAMs)?

A: The emergence of Large Action Models (LAMs) was highlighted as an advanced AI system capable of performing human-like tasks in digital environments.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!