[AINews] Llama 3.1 Leaks: big bumps to 8B, minor bumps to 70b, and SOTA OSS 405b model

Chapters

AI Twitter Recap

Synthetic Data and Model Performance

Exciting Developments in Various AI Discord Communities

AI Collaboration Opportunities

Strategic Communication and Target Audience Differentiation

Nous Research AI Reasoning Tasks Master List

HuggingFace Community Discussions

Mojo 🔥 Nightly Updates

CUDA MODE Cool Links

CUDA MODE Beginner Messages

Challenges and Solutions in LM Studio

API Discussions and Tools

Eleuther gpt-neox-dev

Interconnects (Nathan Lambert)

LangChain AI - Share-Your-Work

Applications of LangChain in AI Projects

Challenges and Updates in Various AI Projects

Building with LLMs and BUD-E Voice Assistant

AI Twitter Recap

This section recaps recent activity on AI Twitter. All recaps were produced by Claude 3.5 Sonnet, taking the best of 4 runs. The section also highlights the release and performance of GPT-4o Mini and links to more information.

Synthetic Data and Model Performance

- Surpassing Teachers: @_philschmid shared that the AI-MO team's winning dataset with a fine-tuned @Alibaba_Qwen 2 model approaches or surpasses GPT-4o and Claude 3.5 in math competitions, demonstrating the potential of synthetic datasets to enable models to outperform their teachers.

- NuminaMath Datasets: @_lewtun introduced the NuminaMath datasets, the largest collection of ~1M math competition problem-solution pairs, which were used to win the 1st Progress Prize of the AI Math Olympiad. Models trained on NuminaMath achieve best-in-class performance among open weight models.

Exciting Developments in Various AI Discord Communities

This section highlights the latest discussions and updates from different AI Discord communities, from optimizing data handling in Mojo 🔥 Community Meeting #4 to recommending ComfyUI over Forge in the Stability.ai Discord. Users explore topics like implementing Newton's Method for Float Literals in Mojo, anticipating Mojo GPU support this summer, and comparing Forge to Easy Diffusion. Developers also share knowledge on using Latent mode for Regional Prompter and resolving VRAM compatibility issues. The CUDA MODE Discord covers an SVD CUDA implementation, building with LLMs, and FP16 vs FP32 performance on the A100. GPT-4o Mini's introduction is discussed in the Perplexity AI Discord, and YouTube's AI conversational tests and OpenAI's advancements are discussed in their respective channels. Updates on deep learning models, tools, and community interactions are shared in the OpenRouter, LM Studio, and Cohere communities. The Eleuther Discord delves into the Nemotron-340B bounty, hypernetworks, and feature contamination in neural networks. Finally, the Interconnects and Latent Space Discord channels explore Meta AI's potential offerings, UltraChat's effectiveness, and Langfuse's performance.

AI Collaboration Opportunities

Artist Aria, a 2D/3D artist, is seeking collaboration opportunities within the AI community to combine artistic creativity with technological advancements. They aim to contribute their artistic skills to projects that involve AI and machine learning, fostering a cross-disciplinary approach to innovation and creation.

Strategic Communication and Target Audience Differentiation

The MLOps @Chipro Discord channel saw discussions on clarifying target audience needs and formulating strategic communication approaches for engaging engineers, devrels, solution architects, and aspiring engineers. Members highlighted the need to tailor messages to each role in order to convey product features and benefits effectively. The DiscoResearch Discord section detailed tactical, operational, and strategic insights from practitioners building with LLMs, accompanied by a TLDR video. The TLDR series, recommended by the authors, provides actionable advice for LLM practitioners.

Nous Research AI Reasoning Tasks Master List

The discussion around the Nous Research AI reasoning tasks master list covered various topics related to improving the reasoning capabilities of Large Language Models (LLMs). These included QuietStar inspiring auto-generated prompts, proposals for a type system for LLMs, the use of intermediate representations, refining task structures in the Open-Reasoning-Tasks repository, and various frameworks and tools for enhancing LLM reasoning. The community also debated integrating logic programming languages like Prolog and ProbLog into LLM tasks, emphasizing the importance of probabilistic reasoning and multi-agent systems in model development.

HuggingFace Community Discussions

The HuggingFace community discusses various topics including Knowledge Graphs, News Reading Experience, Model Kwargs on Hugging Face, and Speaker Diarization & Whisper Transcription. Users share experiences, ask questions, and provide feedback on different projects and initiatives within the community. The community also explores topics like Apple Intelligence, LoRA fine-tuning, AI papers on arXiv, AI's impact on the world, and free online courses. Mentions of new projects like SmolLM Arena, live music generation with Gary4live, research calls, and workshops on Large Language Models (LLMs) showcase the community's diverse interests and activities.

Mojo 🔥 Nightly Updates

The #nightly channel in Modular (Mojo 🔥) was abuzz with discussions on various topics including compiler updates, LegacyPointer and DTypePointer discussions, issues with the memcpy function, and changes in the Mojo API. Members engaged in detailed conversations about these subjects, sharing insights and seeking solutions to technical challenges.

CUDA MODE Cool Links

- CubeCL enables CUDA kernels in Rust: The CubeCL project introduces a multi-platform, high-performance compute language extension for Rust, using nvrtc for CUDA and a comptime system for kernel specialization. Discussion points include the limitations of borrow checking and pointers, with CubeCL's comptime system still far from matching Zig's capabilities but useful for avoiding bounds checks and for loop unrolling.

CUDA MODE Beginner Messages

- Triton Block Size Explained: A member clarified the BLOCK_SIZE concept in Triton, likening it to TILE_SIZE in CUDA, with discussion on num_warps controlling thread block size. Another member admitted initial confusion, discussing similarities and differences.

- NVCC Compiler and Variable-Length Arrays: Members debated the feasibility of using arrays whose length is defined at runtime (variable-length arrays, VLAs) in the non-GPU part of code compiled with NVCC, with one member explaining that VLAs are a C feature and not part of the C++ standard.

- FP16 Performance Considerations: Discussion centered on unexpectedly lower FP16 performance compared to FP32 on the A100, with hardware architecture and overheads considered as possible factors.

- Understanding Triton Multi-Stage Pipelining: Queries arose on the purpose and specifics of pipelining stages in Triton, with members struggling to grasp the concept's significance beyond basic CPU architecture pipelining.
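The BLOCK_SIZE idea from the first item above can be illustrated without a GPU. The following is a minimal pure-Python sketch, not Triton code: each simulated "program" handles one tile of BLOCK_SIZE elements, and a bounds check plays the role of the mask that protects the last, partially filled tile. The function names (`cdiv`, `add_program`, `blocked_add`) are hypothetical and chosen only for this illustration.

```python
def cdiv(a, b):
    """Ceiling division: number of tiles of size b needed to cover a elements."""
    return (a + b - 1) // b


def add_program(x, y, out, pid, block_size):
    """One simulated program instance: processes the tile owned by `pid`."""
    start = pid * block_size
    for offset in range(start, start + block_size):
        # Equivalent of a mask: skip offsets past the end of the input.
        if offset < len(x):
            out[offset] = x[offset] + y[offset]


def blocked_add(x, y, block_size):
    """Launch one simulated program per tile and return (result, grid size)."""
    n = len(x)
    out = [0] * n
    grid = cdiv(n, block_size)
    for pid in range(grid):  # on a GPU these instances would run in parallel
        add_program(x, y, out, pid, block_size)
    return out, grid


result, grid = blocked_add(list(range(10)), [1] * 10, block_size=4)
print(grid, result)
```

With 10 elements and a block size of 4, three program instances are needed and the last one touches only two valid elements. In real Triton the tile is processed with vectorized loads and stores rather than a Python loop, and num_warps separately sets how many threads cooperate on each tile.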

Challenges and Solutions in LM Studio

- Quantization and VRAM Issues: Users discussed the non-quantized nature of 'f16' and experiences with models on limited VRAM.

- LM Studio and Hugging Face API: LM Studio's search issues were linked to problems with the Hugging Face API, causing intermittent failures until resolution. Troubleshooting steps included VPN use and reinstalls.

- Efficiently Running Large Models: Tips were shared on running large models locally by balancing VRAM and RAM, updating NVIDIA/CUDA drivers, and using tools like LM Studio.

- Local LLMs for Privacy and Utility: Dialogues revolved around the benefits of local LLMs for maintaining data privacy and offline use, highlighting the utility focus over direct monetary gain.
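To make the VRAM discussion above concrete, here is a rough back-of-the-envelope sketch of how weight storage scales with precision. It is an approximation under stated assumptions: the bytes-per-weight values ignore the scale and metadata overhead that real quantized formats carry, the estimate covers weights only (no KV cache or activations), and the 8-billion-parameter model and 8 GiB GPU are hypothetical inputs, not figures from the discussion.

```python
# Approximate bytes per weight. "f16" is unquantized half precision; the
# "q8"/"q4" entries are idealized and omit per-block overhead (assumption).
BYTES_PER_WEIGHT = {"f32": 4.0, "f16": 2.0, "q8": 1.0, "q4": 0.5}
GIB = 1024 ** 3


def weight_memory_gib(n_params, fmt):
    """Estimated memory for the weights alone, in GiB."""
    return n_params * BYTES_PER_WEIGHT[fmt] / GIB


def split_vram_ram(total_gib, vram_gib):
    """Place as much as fits in VRAM; the remainder spills to system RAM."""
    in_vram = min(total_gib, vram_gib)
    return in_vram, total_gib - in_vram


# Hypothetical example: an 8B-parameter model on a GPU with 8 GiB of VRAM.
for fmt in ("f16", "q8", "q4"):
    total = weight_memory_gib(8e9, fmt)
    in_vram, in_ram = split_vram_ram(total, vram_gib=8.0)
    print(f"{fmt}: {total:.1f} GiB total, {in_vram:.1f} GiB VRAM, {in_ram:.1f} GiB RAM")
```

The point of the sketch is the ratio rather than the exact numbers: halving the bytes per weight halves the footprint, which is why an f16 model that must be split between VRAM and RAM may fit entirely in VRAM once quantized.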

API Discussions and Tools

This section covers discussions related to the OpenAI API, including the replacement of GPT-3.5 with GPT-4o Mini, feature comparisons between ChatGPT and the API, and considerations for longform content generation. Members also explore the differences in voice integration and text-to-speech quality between the two platforms. The community expresses excitement for GPT-4o Mini and speculates about future advancements in AI technology. Additionally, tools such as Guidance AI for prompt engineering are discussed, with a focus on improving ChatGPT responses and using custom instructions effectively.

Eleuther gpt-neox-dev

This section covers discussions in the Eleuther gpt-neox-dev channel, including the offer of a bounty for converting Nemotron-340B, debates on Nemotron-340B's architecture uniqueness, feasibility of vLLM multinode inference, challenges of multi-node performance and testing, and nerdsniping along with integrating features into the evaluation harness.

Interconnects (Nathan Lambert)

Seeking blog post on distillation

- A member inquired if anyone has a favored blog post on distillation.

Surprise at lack of Lilian Weng's work

- Another member expressed surprise at the absence of a comprehensive Lilian Weng blog post on the topic, suggesting there isn't a 20k word post available.

LangChain AI - Share-Your-Work

Triplex slashes knowledge graph creation costs:

The Triplex model by SciPhi.AI has been open-sourced, reducing knowledge graph creation costs by 98% and outperforming GPT-4 at a fraction of the cost. Triplex is a finetuned version of Phi3-3.8B that extracts triplets from unstructured data, enhancing methods like Microsoft's Graph RAG.
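As a rough illustration of what "extracting triplets" feeds into, the sketch below builds a tiny knowledge graph from (subject, predicate, object) triples. It does not call Triplex or any SciPhi.AI API; the triples are hand-written from the description above, and the names `build_graph` and `neighbors` are hypothetical.

```python
from collections import defaultdict

# Hand-written triples for illustration only; a triplet-extraction model
# would produce tuples of this shape from unstructured text.
TRIPLES = [
    ("Triplex", "published_by", "SciPhi.AI"),
    ("Triplex", "finetuned_from", "Phi3-3.8B"),
    ("Triplex", "extracts", "triplets"),
]


def build_graph(triples):
    """Index triples by subject: subject -> list of (predicate, object)."""
    graph = defaultdict(list)
    for subject, predicate, obj in triples:
        graph[subject].append((predicate, obj))
    return dict(graph)


def neighbors(graph, entity, predicate=None):
    """Objects linked from `entity`, optionally filtered by predicate."""
    return [
        obj
        for pred, obj in graph.get(entity, [])
        if predicate is None or pred == predicate
    ]


graph = build_graph(TRIPLES)
print(neighbors(graph, "Triplex", "finetuned_from"))
```

A production graph store would add entity deduplication and typed nodes, but the subject-indexed adjacency list is enough to show why cheap, accurate triple extraction is the cost-dominating step in graph-based retrieval.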

Visualize vectors in NLP using graphs:

A GitHub project has been created to visualize vectors on a graph, aiding in easier interpretation of natural language processing outputs.

Applications of LangChain in AI Projects

This section discusses various AI projects leveraging LangChain technology. It includes a tutorial on Semantic Search using LangChain, Cohere LLM, and ApertureDB, an AI-powered function builder for TypeScript called AI Fun, and a guide for creating a Scheduler Agent using Composio, LangChain, and ChatGPT. The creation of a chat module using Cohere's Command R+ and the encouragement for feedback, the automation of TypeScript function building processes through AI, and the empowerment of users to manage tasks more efficiently with Scheduler Agents are highlighted. Additionally, there are links to detailed guides and blog posts related to these projects.

Challenges and Updates in Various AI Projects

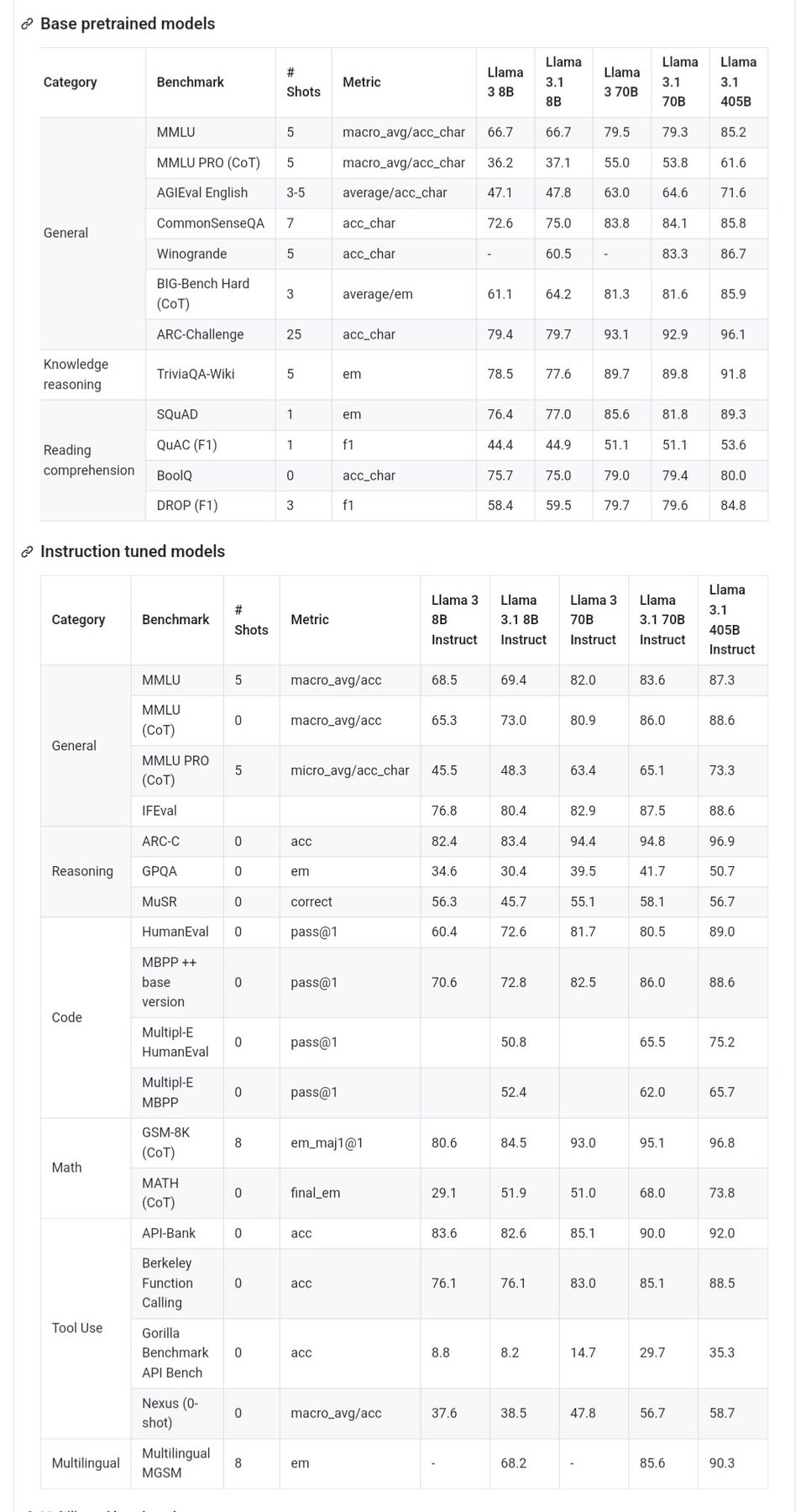

This section highlights challenges and recent updates in different AI projects. Topics include issues with the GPT-4o Mini model affecting data extraction pipelines, the release of a DSPy tracing feature for enhanced workflows, challenges with TypedPredictors and complex Pydantic classes, and the validation of the joint optimization approach in the DSPy paper. Discussions around the reliability of DSPy optimizers, the performance of the DeepSeek model, and bitcast functionality in tinygrad are also covered. The section also includes updates on projects like Pinokio's Augmentoolkit and Meta Llama 3.1, as well as inquiries and discussions in various AI-related channels.

Building with LLMs and BUD-E Voice Assistant

The section discusses insights from a year of building with LLMs, where a user shared a video and blog post summarizing tactical, operational, and strategic lessons. It also covers a demo of the open-source BUD-E voice assistant with an invitation to collaborate. Additionally, various links mentioned for further reference are provided.

FAQ

Q: What is the Triplex model by SciPhi.AI?

A: The Triplex model by SciPhi.AI is an open-sourced model that reduces knowledge graph creation costs by 98% and outperforms GPT-4 at a fraction of the cost. It is a finetuned version of Phi3-3.8B that extracts triplets from unstructured data.

Q: What are the key highlights of the NuminaMath datasets?

A: The NuminaMath datasets are the largest collection of ~1M math competition problem-solution pairs. Models trained on NuminaMath achieve best-in-class performance among open weight models and were used to win the 1st Progress Prize of the AI Math Olympiad.

Q: What is the purpose of the CubeCL project?

A: The CubeCL project introduces a multi-platform, high-performance compute language extension for Rust, using nvrtc for CUDA and a comptime system for kernel specialization. It aims to enable CUDA kernels in Rust.

Q: What were some of the discussions in the Modular (Mojo 🔥) #nightly channel?

A: Discussions in the Modular (Mojo 🔥) #nightly channel included topics like compiler updates, LegacyPointer and DTypePointer discussions, issues with the memcpy function, and changes in the Mojo API. Members engaged in detailed conversations about technical challenges.

Q: What is the focus of the discourse in the Nous Research AI reasoning tasks master list?

A: The discourse in the Nous Research AI reasoning tasks master list delves into various topics related to improving reasoning capabilities of Large Language Models (LLMs). It includes discussions on concepts like QuietStar inspiring auto-generating prompts, proposals for LLM's type system, and integrating logic programming languages like Prolog and ProbLog into LLM tasks.
